Training

It’s not about training

It’s not really about training; it’s about learning. That means putting the learner at the centre of the event and being clear about what learning is supposed to take place, how that learning relates to and builds on the learner’s prior experience, and how they will use what they learn in the future.

And that means that if you want to monitor the results of the training, you will need something more substantial than a smiley-face feedback sheet.

It’s definitely not about PowerPoint

We all know that people learn by doing. But creating a learning environment in which people learn through practising, getting things wrong, improving them and getting them right requires a degree of reflection and effort that many trainers appear not to bother with. The result is death by PowerPoint. I don’t allow that.

It is about partnership

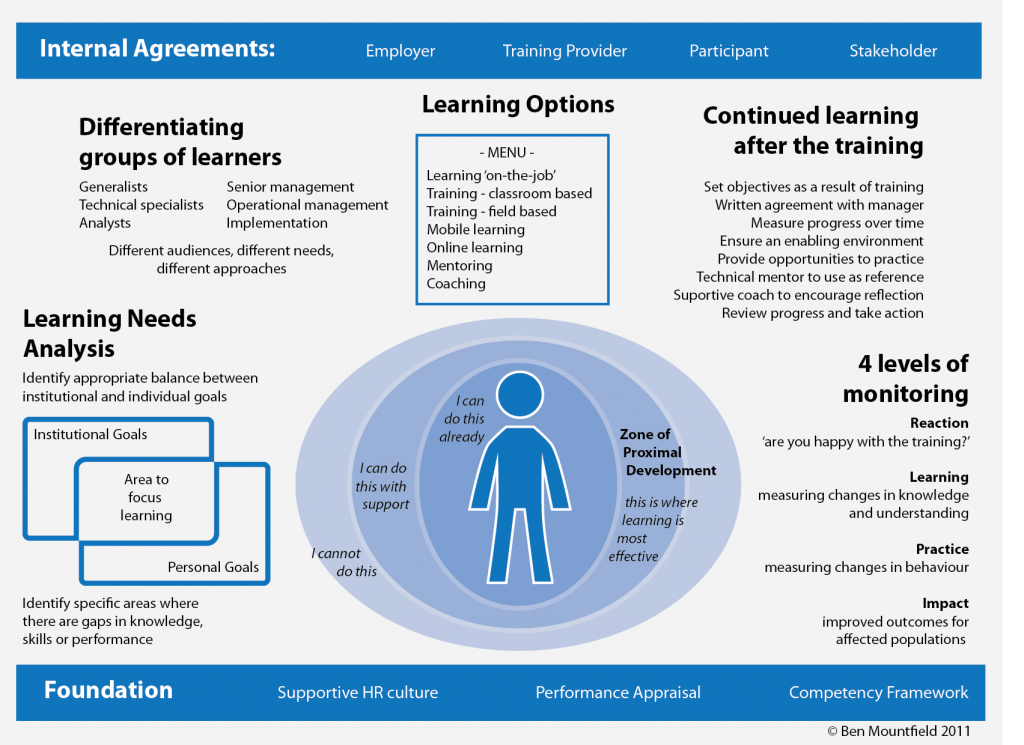

This infographic shows a good-practice adult learning environment, with the learner at the centre. Along the top we see the agreements between the key stakeholders; along the bottom, the foundation on which the learning environment rests.

Five areas of practice surround the learner: five areas in which those commissioning the training and the facilitator must work together to ensure high-quality, appropriate outcomes. If any of these areas is missing, the opportunities for learning are undermined.

Immediately surrounding the learner is the level of the course content: it must provide scaffolding that stretches learners, allowing them to grow and develop. Like Goldilocks’ porridge, it should be neither too hot nor too cold.

And it is about reflection and improvement

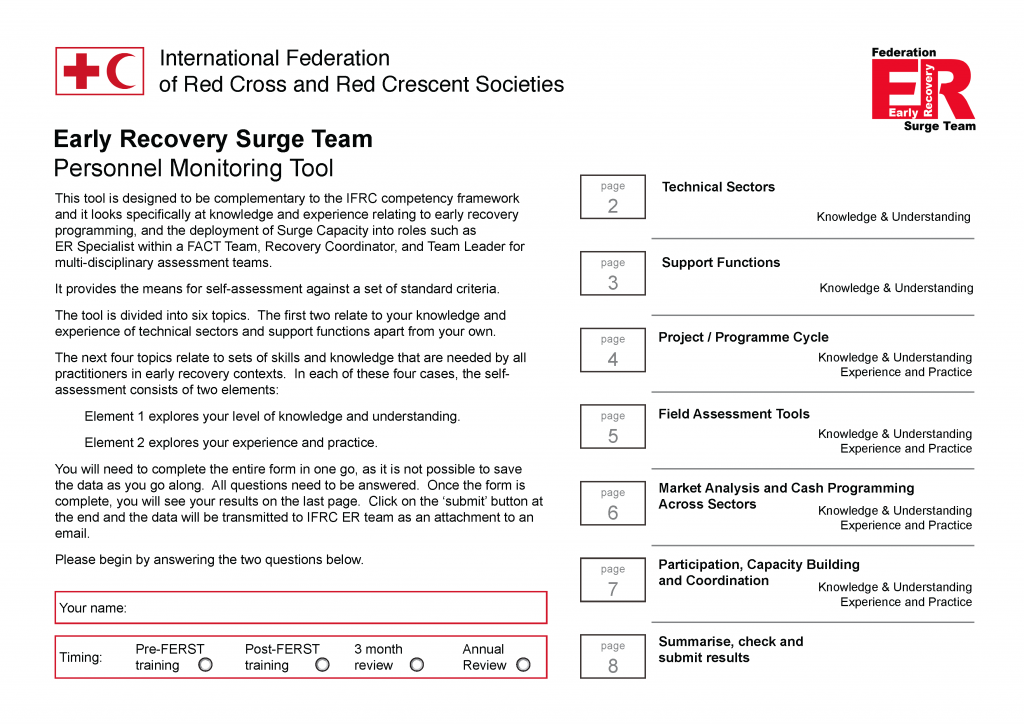

It’s critical that, as practitioners, we are willing to practise what we preach: to develop meaningful monitoring tools and use them to course-correct and make sure we deliver, and to develop effective evaluation tools that help us improve. The bottom-right section of the previous infographic shows Kirkpatrick’s four levels of evaluation, reapplied to the learning environment. Here is the front page of a comprehensive, interactive PDF self-assessment tool used before and at the end of the FERST training, again after a few months, and finally after a year. It considers both content learning and practical application, and covers the first three of Kirkpatrick’s four levels.

The traditional smiley-face evaluation, by comparison, covers only the first level (and usually doesn’t do that very well).

The following consultancies all involved training and learning:

Response Option Analysis – Sindh Province, Pakistan

2017 – Facilitator – for FAO and the Food Security Working Group

Conduct a response option analysis to consider responses to floods and drought in Jamshoro, Umerkot and Tharparkar districts of Sindh Province, Pakistan

Training: Practical Emergency Cash Transfer programming (PECT I, II and III) for the Red Cross Movement

2014 and 2015 – Lead facilitator – for IFRC and the Red Cross Movement

Main tasks: design a new training package on using cash transfers in emergencies; lead facilitation of three training events; revise materials through the pilot phase; continuously adapt the materials to the developing cash transfer toolkit.

Innovation: a new approach to practical exercises on cleaning beneficiary lists and on managing feedback and complaints

Pakistan Simulation Exercise: country-wide flooding

2013 – Team Leader – for IFRC and the Pakistan Red Crescent

Tasks: design of simulation materials; management of the facilitation team; facilitation of a three-day event; leading the lessons-learned workshop and document.

Cash Transfer Programming

2012 – Sole consultant – for IFRC

Tasks: revision of online CTP training; training delivery; development of internal advocacy materials on CTP

Regional Disaster Response Teams Skills Development Review

2012 – Sole consultant – for IFRC South Asia

Key question: how can the training and development of RDRT members be made most effective?

Federation Early Recovery Surge Team III, Malaysia

2012 – Lead facilitator – for IFRC and National Societies

Tasks: review of materials; reprise of the FERST training with stronger emphasis on multi-sectoral approaches, cash, markets and response option analysis.

Review of CTP training materials and monitoring tools

2011 – Sole consultant – for CaLP

Key tasks: consult trainers and participants, review monitoring tools, recommend changes to approach, content and monitoring

Review of the Economic Security training programme

2011 – Team Leader – for ICRC

Tasks: review the training programme, consider alignment with revised strategy, and recommend improvements

Technical support to cash transfer programming research projects

2011 – Sole consultant – for CaLP

Key tasks: Selection and management of consultants for research projects into contingency planning and market assessment; review of toolkit materials.

Household Economic Security Training II

2010 – Lead facilitator, handover – for BRCS

Key tasks: Revise curriculum, and facilitate second round of training; produce full training pack

Household Economic Security Training

2009 – Course design and lead facilitator – for British Red Cross

Task: Develop and lead the training for members of the BRC HES roster

Federation Early Recovery Surge Team II, Uganda

2009 – Course design, lead facilitator – for IFRC

Key tasks: further develop training model and facilitate second iteration

Training: Cash Transfers in Emergencies

2009 – Technical Lead, Co-facilitator – for American Red Cross and IFRC

Brief: Develop and co-facilitate training in CTP for members of ARC’s emergency roster and other relief professionals

Sub-contract: DRM curriculum design

2009 – Advisor

Key tasks: Develop outline DRR and DRM curriculum for a regional development bank

Federation Early Recovery Surge Team

2008 – Course designer and lead facilitator – for IFRC

Key tasks: develop the concept, then design and facilitate a new training course, including simulation and fieldwork, for senior recovery practitioners to be deployed in sudden-onset emergencies; identify appropriate communities and locations for the course to run; develop materials, learn and improve.

Innovation: participatory evaluation tools, practising what we preach